Let's explore how to use Ollama on a local machine through its command-line interface (CLI), without writing any code, and discuss the advantages of running LLMs locally using only a CPU.

Why run LLMs locally on your CPU?

Running LLMs on a CPU offers several key benefits, especially when you do not have access to high-end GPUs:

1. Cost Efficiency

- Cloud-based APIs can incur significant costs, particularly if you run models regularly. Running the model locally eliminates those usage fees.

2. Privacy and Security

- Keeping all data processing local ensures that sensitive information doesn’t have to leave your machine, protecting your privacy and offering full control over your data.

3. Flexibility and Control

- Running an LLM locally on your machine gives you the freedom to customize it for specific use cases without being constrained by cloud service terms or API limitations. You can use it in your preferred workflow and modify it as needed.

4. No Network Latency

- By using a local installation, you avoid the delays that come from network calls to cloud services. Response time then depends only on your own hardware.

Ollama

Ollama is a simple, user-friendly tool that allows you to run pre-trained language models locally on your machine. Ollama is optimized for both CPU and GPU usage, meaning you can run it even if your machine doesn’t have powerful GPU hardware. It abstracts the complexity of setting up models and running them, offering a clean command-line interface (CLI) that makes it easy to get started.

Setting up Ollama:

Step 1:

- Visit the Ollama website to download the installer for your platform (Windows, macOS, or Linux).

- Follow the installation instructions provided for your operating system.

Once installed, you’ll have access to the ollama command directly in your terminal.

Step 2:

- Open the terminal and check the Ollama installation with the following command:

ollama --version

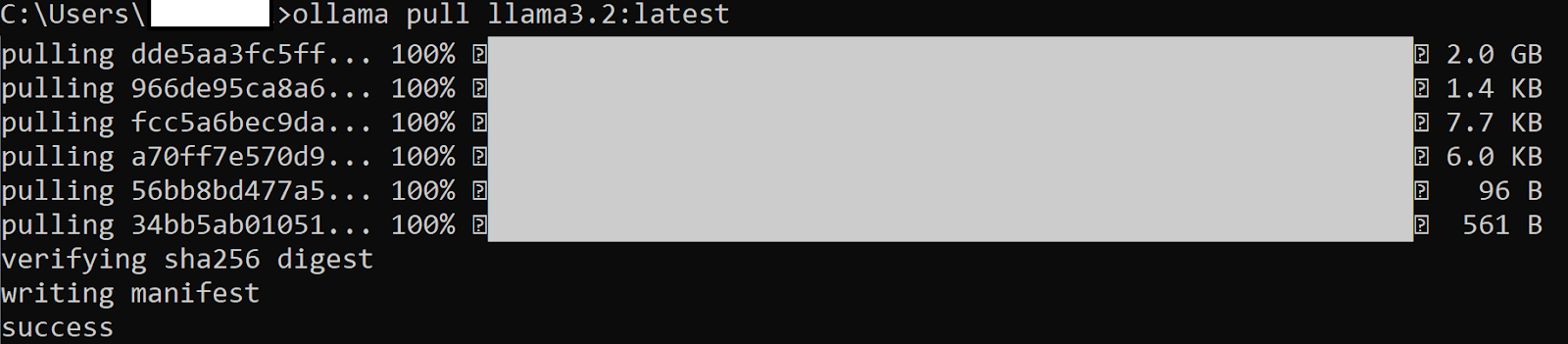

This confirms that ollama is installed properly.

- Pull any locally deployable model, such as Llama 3.2:

ollama pull llama3.2:1b

- List the models downloaded to the local system:

ollama list

- Run the model and start a conversation (replace modelname with a name shown by ollama list, for example llama3.2:1b):

ollama run modelname
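
Besides opening an interactive session, ollama run also accepts the prompt as an argument: it prints the reply and exits, which is convenient for quick checks from the shell. The following is a minimal sketch, assuming the llama3.2:1b model pulled above. The ask helper, the OLLAMA_BIN variable, and the example prompt are hypothetical names introduced here for illustration only.

```shell
# Hypothetical helper: send a single prompt to a local model and print the reply.
MODEL="llama3.2:1b"                   # the model pulled in the step above
OLLAMA_BIN="${OLLAMA_BIN:-ollama}"    # assumption: lets you point at a different binary

ask() {
    # Fail with a clear message if the CLI is not installed.
    if ! command -v "$OLLAMA_BIN" >/dev/null 2>&1; then
        echo "$OLLAMA_BIN is not on PATH; install Ollama first" >&2
        return 127
    fi
    # Passing the prompt as an argument runs one request and exits
    # instead of opening the interactive session.
    "$OLLAMA_BIN" run "$MODEL" "$1"
}

# Example (uncomment to run; the first reply may take a while on a CPU):
# ask "Explain what a large language model is in one sentence."
```

Wrapping the call in a function keeps the model name in one place, so switching to another model from ollama list only requires changing MODEL.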

Useful Ollama Commands:

| Command | Description |
| --- | --- |
| ollama serve | Starts the Ollama server on your local system |
| ollama show | Displays details about a specific model, such as its architecture, parameter count, and quantization |
| ollama run | Runs the specified model, downloading it first if it is not already on your system |
| ollama list | Lists all the downloaded models |
| ollama ps | Shows the currently running models |
| ollama stop | Stops the specified running model |
| ollama pull | Pulls the specified model to the local system |
| ollama rm | Removes the specified model from your system |
| /bye | Exits the Ollama conversation (typed inside the interactive session, not in the shell) |
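
Several of the commands above take a model name as their argument. As a rough illustration, the sketch below strings them together to inspect a model, unload it from memory, and delete it from disk. It assumes the llama3.2:1b model used earlier in this article; the cleanup_model helper and the OLLAMA_BIN variable are hypothetical names, not part of Ollama.

```shell
# Hypothetical helper: inspect a model, unload it, then delete it from disk.
MODEL="llama3.2:1b"                   # assumption: the model pulled earlier
OLLAMA_BIN="${OLLAMA_BIN:-ollama}"    # assumption: lets you point at a different binary

cleanup_model() {
    # Fail with a clear message if the CLI is not installed.
    if ! command -v "$OLLAMA_BIN" >/dev/null 2>&1; then
        echo "$OLLAMA_BIN is not on PATH; install Ollama first" >&2
        return 127
    fi
    "$OLLAMA_BIN" show "$1"    # print details about the model
    "$OLLAMA_BIN" ps           # list models currently loaded in memory
    "$OLLAMA_BIN" stop "$1"    # unload the model if it is running
    "$OLLAMA_BIN" rm "$1"      # remove the model files from disk
}

# Example (uncomment to run; this deletes the downloaded model):
# cleanup_model "$MODEL"
```

After ollama rm, the model no longer appears in ollama list and has to be pulled again before it can be run.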

Challenges and Limitations of running LLMs locally on CPUs

While running LLMs locally with Ollama has many advantages, there are some limitations to keep in mind:

- Performance on CPUs: Running large models on CPUs can be slower than using GPUs. Although Ollama is optimized for CPUs, you may still experience slower response times, especially with more complex tasks.

- Memory Usage: LLMs can consume a lot of memory, because the model weights must fit in RAM. A small model such as llama3.2:1b needs only a few gigabytes, but aim for at least 16 GB of RAM if you plan to run larger models. Models that exceed the available memory can cause slowdowns or crashes.

- Model Size: Models at the scale of GPT-3 (175 billion parameters) are not practical to run on a CPU due to their massive size and resource requirements, and GPT-3 itself is not available for download. Ollama offers smaller models that are more feasible to run on CPU-based machines, but for the largest models, you may still need a GPU for optimal performance.
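
To make the memory and model-size points above more concrete, the space taken by the weights alone can be approximated as the number of parameters times the bits per weight, divided by 8. The sketch below does that arithmetic in the shell. The estimate_weights_gb name is hypothetical, the bit widths are illustrative quantization levels, and real memory use will be higher because the conversation context and the runtime itself also need RAM.

```shell
# Rough weights-only estimate in GB (decimal):
#   parameters (in billions) * bits per weight / 8
# This ignores context and runtime overhead, so treat it as a lower bound.
estimate_weights_gb() {
    # $1 = parameters in billions, $2 = bits per weight (for example 16, 8, or 4)
    awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

estimate_weights_gb 1 4     # 1B parameters at 4 bits per weight  -> 0.5
estimate_weights_gb 8 4     # 8B parameters at 4 bits per weight  -> 4.0
estimate_weights_gb 8 16    # 8B parameters at 16 bits per weight -> 16.0
```

The same 8B model needs about four times as much memory at 16 bits per weight as at 4 bits, which is why smaller or more heavily quantized models are the practical choice on CPU-only machines.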

Conclusion:

Ollama makes running LLMs locally on a CPU simple and accessible, offering an easy-to-use CLI for tasks like text generation and question answering, without needing code or complex setups. It lets you run models efficiently on various hardware, providing privacy, cost savings, and full data control.